Edition #2: Agent Security Standards + Identity/Authorization + Secure Agent Engineering + SecureVibes Update

Sandesh Mysore Anand and I recorded a couple of podcast episodes for The Boring AppSec Podcast with Ken Huang and Teja Myneedu over the past few weeks. Below are some key takeaways from them:

Architecting AI Security: Standards and Agentic Systems with Ken Huang

The conversation focused on the necessity of new security frameworks and authentication methods to manage the unique risks posed by autonomous AI agents.

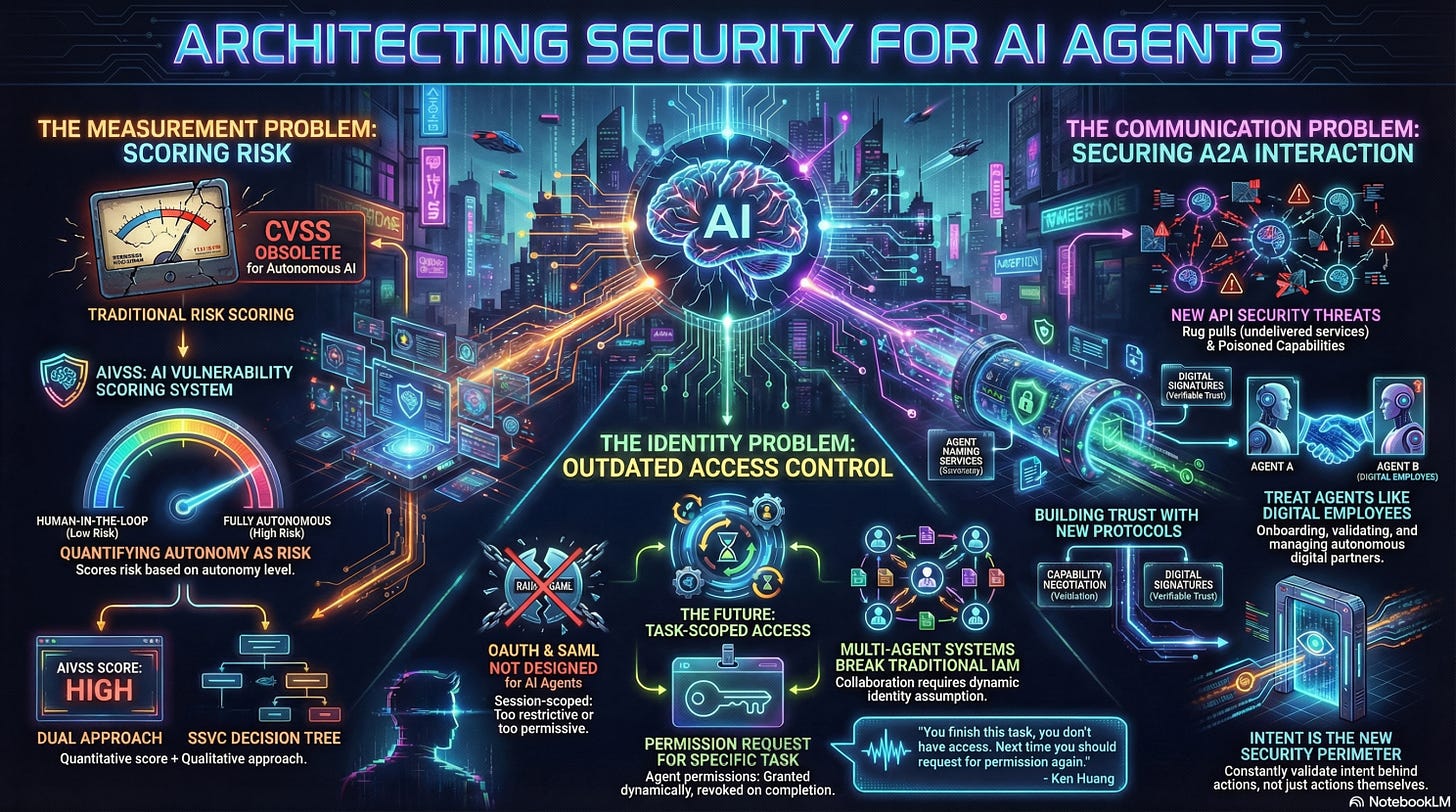

New Standards for Measuring AI Agent Risk: OWASP AIVSS

Ken detailed the AIVSS framework’s purpose and structure.

The Goal: AIVSS aims to provide a way to measure core agentic AI security risks to enable better risk management, fitting into the “measure” component of the NIST AI RMF.

Addressing Autonomy: Traditional scoring systems like CVSS are deterministic, measuring code and configuration. They are insufficient for agentic AI due to its non-deterministic and autonomous nature.

The Scoring Approach: AIVSS builds upon CVSS by adding an agentic AI risk factor to account for non-deterministic risks. This factor considers the agent’s level of autonomy (ranging from non-existent to full autonomy), since different levels of autonomy carry different levels of risk.

Framework Components: AIVSS offers a quantitative, numerical score. It is being developed alongside a qualitative, decision-matrix-based system called SSVC (Stakeholder-Specific Vulnerability Categorization).

The Shortcomings of Traditional IAM for AI Agents

Ken asserted that traditional Identity and Access Management (IAM) systems, such as OAuth and SAML, are fundamentally inadequate for securing AI agents. These legacy standards were designed for web applications acting on a human’s behalf.

Session-Scoped vs. Task-Scoped: The primary issue is that current OAuth flows are session-scoped (time-based) and grant access that is additive upon request. Agents, however, require dynamic, fine-grained access that is strictly task-scoped. Access should be removed once a task is finished, requiring a new permission request for subsequent tasks.

Coarse-Grained Access: Traditional IAM is often either too restrictive, stifling the agent’s necessary agency, or too coarse-grained. For instance, an HR agent might need access to a resume database but should be restricted from the salary database; granting the full human identity is too risky.

Multi-Agent Complexity: Current systems struggle to accommodate multi-agent systems, which are key to future AI workflows. In these environments, different agents assume different identities, and access must be managed with a dynamic task scope.

The Way Forward: A new standard is necessary. This standard must allow for agency while maintaining security by consistently checking the agent’s intent before granting access.
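To make the session-scoped vs. task-scoped distinction concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `AccessBroker`, `task_scope`, and the resource names are hypothetical and do not correspond to any existing IAM product or proposed standard. It only shows the shape of the idea: access exists for the duration of one task and is gone afterwards.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class AccessBroker:
    """Toy in-memory broker (hypothetical); tracks which grants are live."""
    policy: dict[str, set[str]]  # agent -> resources it may ever request
    active: set[tuple[str, str]] = field(default_factory=set)

    @contextmanager
    def task_scope(self, agent: str, resource: str, task: str):
        # Refuse anything outside what this agent may request at all.
        if resource not in self.policy.get(agent, set()):
            raise PermissionError(f"{agent} may not access {resource} for {task!r}")
        self.active.add((agent, resource))
        try:
            yield
        finally:
            # Access is removed when the task ends, even if the task failed.
            self.active.discard((agent, resource))

    def can_access(self, agent: str, resource: str) -> bool:
        return (agent, resource) in self.active


# The HR example from above: resumes are allowed, salaries are not.
broker = AccessBroker(policy={"hr_agent": {"resume_db"}})

with broker.task_scope("hr_agent", "resume_db", task="screen candidates"):
    during = broker.can_access("hr_agent", "resume_db")
after = broker.can_access("hr_agent", "resume_db")

try:
    with broker.task_scope("hr_agent", "salary_db", task="screen candidates"):
        pass
    salary_denied = False
except PermissionError:
    salary_denied = True
```

Inside the `with` block the agent can read the resume database; once the block exits, the next task has to ask again. A real system would also need to evaluate the agent's intent before granting the scope, which this sketch leaves out.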

Securing Agent-to-Agent (A2A) Communication

The rise of agent development kits (ADKs) and A2A protocols (like Google’s A2A protocol) introduces new security challenges beyond those seen in traditional API security.

Beyond BOLA: While standard API issues like BOLA (Broken Object Level Authorization) still exist, A2A communication requires systems to handle issues like trust, capability, and quality of service. Agents must be protected from risks like poisoned agent cards or rug pull attacks.
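One simple, partial defense against a rug pull is to pin what you approved and notice when it changes. The sketch below assumes an agent card can be represented as a plain dictionary; the field names are made up for illustration and are not the A2A schema, and `CardPins` is a hypothetical helper. It only detects changes after approval, so it does nothing for a card that was poisoned from the start.

```python
import hashlib
import json


def card_digest(card: dict) -> str:
    """SHA-256 over a canonical JSON form, so key order does not matter."""
    canonical = json.dumps(card, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class CardPins:
    """Remembers the digest of each agent card at the time it was approved."""

    def __init__(self) -> None:
        self._pins: dict[str, str] = {}

    def approve(self, agent_id: str, card: dict) -> None:
        self._pins[agent_id] = card_digest(card)

    def is_unchanged(self, agent_id: str, card: dict) -> bool:
        pinned = self._pins.get(agent_id)
        # Unknown agents are treated as not approved.
        return pinned is not None and pinned == card_digest(card)


# Hypothetical card contents, for illustration only.
approved_card = {"name": "invoice-agent", "skills": ["read_invoice"]}
pins = CardPins()
pins.approve("invoice-agent", approved_card)

# Later, the remote agent quietly advertises a new skill.
changed_card = {"name": "invoice-agent", "skills": ["read_invoice", "send_payment"]}
needs_review = not pins.is_unchanged("invoice-agent", changed_card)
```

When `needs_review` is true, the calling agent should stop and ask for re-approval instead of trusting the new capabilities.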

New Protocols: Ken emphasized the need for protocols like the Agent Capability Negotiation and Binding Protocol (ACNBP). This protocol facilitates validation using digital signatures to ensure the agent possesses the capabilities and quality of service it claims.

Goal Manipulation Attacks: A major threat to autonomous systems is goal manipulation, which is challenging to defend against. It includes attacks such as Drifting (Crescendo Attack), which gradually shifts the agent’s intended goal (e.g., prompting a security agent to open ports instead of locking them); Malicious Goal Expansion, which uses prompt injection to force an agent to execute its assigned task while also performing a malicious secondary task, such as leaking secret environment variables; and Exhaustion Loop, which uses direct or indirect prompt injection to make the agent perform a task that never completes, leading to a denial of service or a “denial of wallet”.
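Of the three, the exhaustion loop is the easiest to put a hard limit on. Below is a minimal sketch of a per-run budget, assuming the agent loop can be modeled as a `step_fn` that returns whether it is done and what the step cost. `RunBudget`, `run_agent`, and the cost numbers are hypothetical names and values for illustration, not part of any agent framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RunBudget:
    """Caps both the number of steps and the money a single run may spend."""
    max_steps: int
    max_cost_usd: float
    steps: int = 0
    cost_usd: float = 0.0

    def exhausted(self) -> bool:
        return self.steps >= self.max_steps or self.cost_usd >= self.max_cost_usd

    def record(self, cost_usd: float) -> None:
        self.steps += 1
        self.cost_usd += cost_usd


def run_agent(step_fn: Callable[[], tuple[bool, float]], budget: RunBudget) -> str:
    """Run steps until the task reports done or the budget is used up."""
    while not budget.exhausted():
        done, cost = step_fn()
        budget.record(cost)
        if done:
            return "completed"
    return "halted: budget exhausted"


# A task that never finishes, as an injected instruction might cause.
def never_done() -> tuple[bool, float]:
    return False, 0.01


budget = RunBudget(max_steps=5, max_cost_usd=1.00)
result = run_agent(never_done, budget)
```

The budget does not stop the injection itself; it only bounds the damage, turning an open-ended “denial of wallet” into a fixed, known worst case per run.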

Security professionals were encouraged to engage in research-oriented learning and contribute to these evolving standards to keep pace with the rapidly innovating field of AI security.

Scaling Product Security In The AI Era with Teja Myneedu

In this conversation, Teja noted that his transition from focusing purely on product and application security to leading broader security teams provided a crucial worldview: securing products extends beyond the boundary of writing code and affects the entire enterprise.

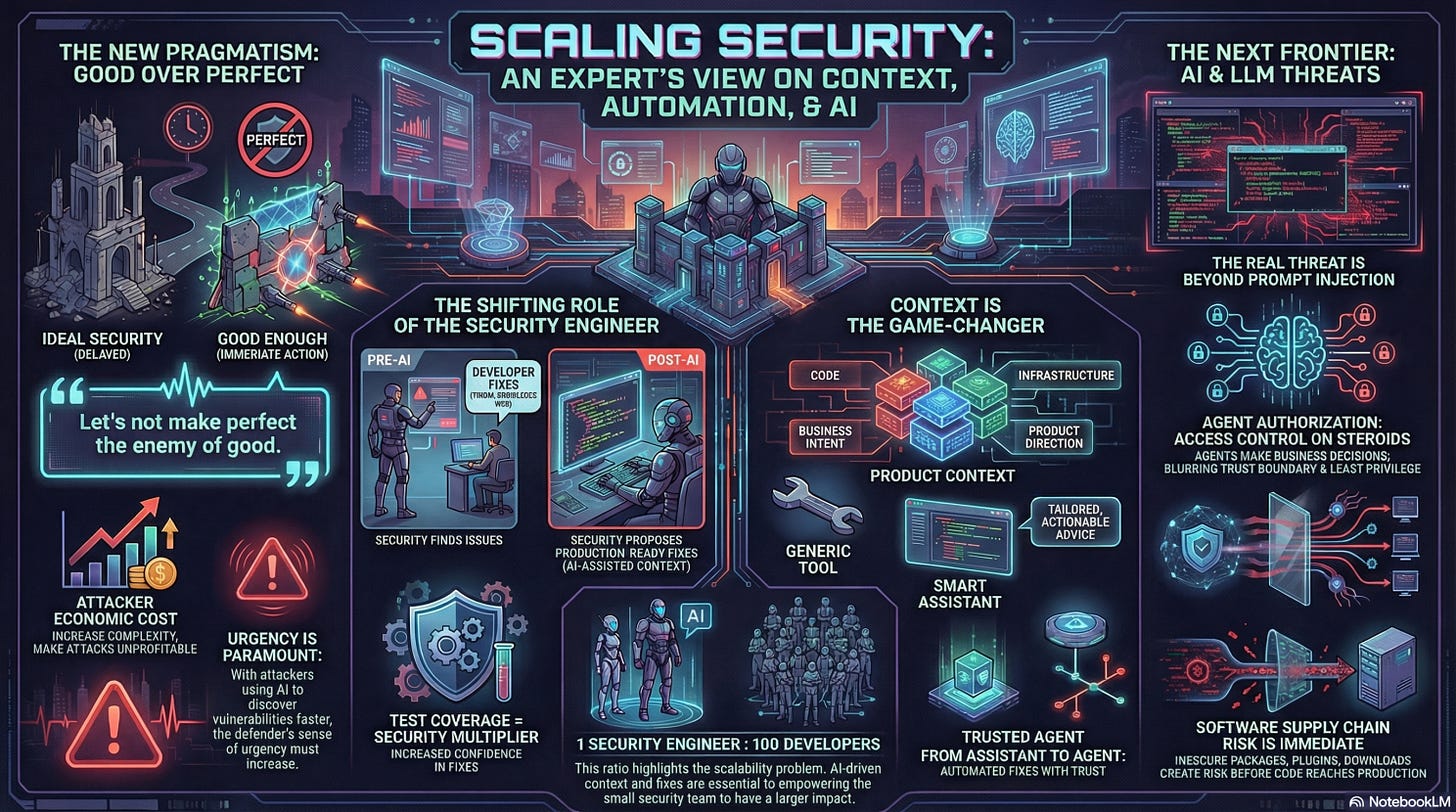

Security Philosophy and Prioritization

A key evolution in Teja’s philosophy centers on practicality and urgency. He believes security breaches often occur because organizations failed to do the hard work of tightening access or addressing individual vulnerabilities, rather than failing to find the next cool thing.

Security by Obscurity: Teja emphasized that he is willing to accept incremental steps toward security improvement, asserting, “Let’s not make perfect the enemy of good.” While acknowledging the historical debate around “security by obscurity,” he argued that any measure (such as implementing a WAF rule) that improves security by even 1% or 2% daily is valuable. Given that bad actors are increasingly using AI agents to explore attack surface areas, the sense of urgency necessitates immediately plugging the bleeding rather than waiting weeks for an ideal fix. The goal should be to increase the economic complexity of an attack for bad actors.

Risk Prioritization: The discussion touched on the challenge of risk prioritization. Teja noted the dilemma between presenting the full scope of vulnerabilities (which can feel overwhelming) and prioritizing risks. However, all prioritization is inherently flawed and only necessary when limited resources prevent fixing everything. Security tools often fail at prioritization because they lack the necessary context regarding people, processes, and organizational strategy.

AI, Context, and the Future of Fixing

The conversation explored how AI and automation are changing the role of security teams, particularly concerning code fixes. Traditionally, security teams manage vulnerabilities, while developers own the fixing.

Security Engineers as Fixers: We discussed whether security engineers should raise PRs for code fixes. I mentioned that security engineers should know how to fix vulnerabilities and can now use AI to easily propose PRs for engineers to approve or reject. Teja added a crucial nuance: the problem isn’t the technical fix itself, but ensuring the fix doesn’t cause unintended downstream effects (like authorization changes breaking service-to-service calls), which relies heavily on tribal knowledge within the engineering teams.

The Power of Context: AI’s promise lies in reducing the cognitive load on engineers by helping them discover context quickly, serving as “product archaeologists”. Critical product context includes the code repository and deployment infrastructure. The harder aspects of context to capture include team ownership (especially after reorgs), business intent, use cases, and priority. The vendors’ ability to gather and contextualize organizational constraints is the “game changer” for security tooling.

Secure Design and Emerging Threats

Secure by Design vs. Secure Defaults: Secure by Design requires clear architecture and the application of standard security practices. While AI increases the promise of applying known security patterns consistently, we discussed that the term “secure by design” has become so broad it has lost meaningful definition, often encompassing “all of security”. The critical distinction lies between secure design (before building) and secure defaults (implementation).

LLM Novel Threats: Beyond known issues like prompt injection, Teja views the biggest threat as the complexity of identity and authorization. When agents are integrated, they dynamically determine business logic and act as decision-making engines, blurring trust boundaries. This compounds the already difficult problem of access control in microservices. The challenge is granting an agent delegate access with appropriate, limited privileges. Teja also expressed heightened concern over the enterprise environment, particularly the software supply chain risk associated with browser plugins and insecure desktop downloads.

The links to both the episodes are provided in the Appendix below.

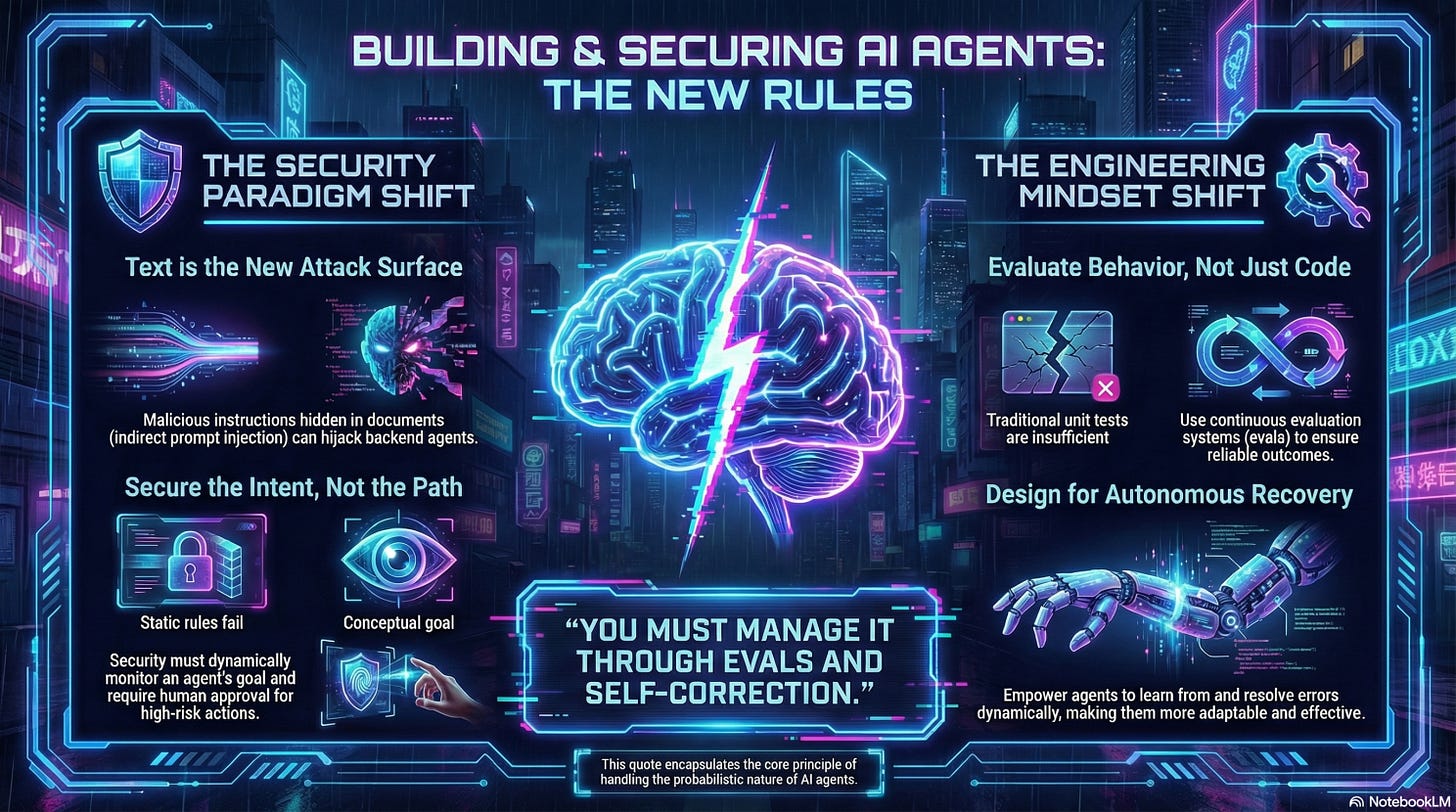

On Secure Agent Engineering

I recently read a blog post that describes the difference between traditional software engineering and agent engineering, and why senior engineers struggle to build AI agents: they try to code away the probabilistic nature of agents instead of embracing it. It touched upon some key points that resonated with me, and I highly recommend giving it a read.

If I were to draw parallels from this blog to security engineering, below are some quick, off-the-top-of-my-head thoughts on the points it makes. Please note that these are not exhaustive by any means:

Text is the new State - A lot of the nuance that gets lost when general engineering forces everything into data structures can now be fed in via prompts, and agents tend to pay attention to it. This is essentially a breeding ground for prompt injection attacks. Prompt injection at large still hasn’t been solved, with folks still debating whether it’s a vulnerability or not. In a chatbot application, where the very nature of the app is to take user input via a prompt and respond, a middleware or some kind of proxy/filter can look for prompt injection attacks as a defensive measure. But imo, the greater risk lies with indirect prompt injection, wherein the main functionality of an app could be something innocuous, for example, uploading files. If the app uses some kind of AI engine in the backend to process these docs (which might contain hidden malicious prompts), it could result in catastrophic outcomes that weren’t obvious. My prediction is that direct/indirect prompt injection will continue to be a major pain when it comes to agentic apps/systems. The only way to manage this risk is to follow a defense-in-depth strategy: reduce the blast radius and follow general security principles such as secure defaults, least privilege, and good observability. As a defense against these attacks, the CaMeL approach looks promising, but time will tell how effective and scalable it will be.
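As one small illustration of reducing blast radius, here is a sketch of a “taint gate”: once untrusted content (say, an uploaded document) has entered the agent’s context, privileged tools are no longer callable in that session. This is not CaMeL and is far simpler than it; `AgentSession` and the tool names are hypothetical, and the sketch assumes the application knows which inputs are untrusted.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSession:
    """Tracks whether untrusted text has entered this session's context."""
    privileged_tools: frozenset[str]
    tainted: bool = False
    context: list[str] = field(default_factory=list)

    def ingest(self, text: str, trusted: bool) -> None:
        self.context.append(text)
        if not trusted:
            # Anything derived from this session may now carry injected instructions.
            self.tainted = True

    def may_call(self, tool: str) -> bool:
        # Least privilege: tainted sessions lose access to privileged tools only.
        return not (self.tainted and tool in self.privileged_tools)


session = AgentSession(privileged_tools=frozenset({"send_email", "delete_file"}))
before_upload = session.may_call("send_email")

# Content of an uploaded document; it may contain hidden instructions.
session.ingest("Quarterly report ... ignore previous instructions ...", trusted=False)
after_upload = session.may_call("send_email")
can_still_summarize = session.may_call("summarize")
```

The gate never tries to detect the injection. It assumes detection can fail and limits what a successful injection is able to reach.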

Agent Intent - There can be multiple ways of getting to an outcome, and we as humans might not know about all of them. So, instead of hardcoding them and restricting the agents, we should focus more on the agent’s intent and the outcome, and let the agent decide how it wants to get there. We can set meaningful milestones to ensure the trajectory is correct, but restricting agents by coding in all the edge cases is not how you build an effective agentic system. Having said that, this is a security nightmare because protecting probabilistic outcomes is not trivial. Security needs to focus on the intent of the agent before it takes any step: what it’s trying to do, what permissions it has, what systems it is connected to, etc. If it’s a high-risk action, getting a human to approve it is going to be table stakes. Security guardrails/policies in the agentic AI space need to be dynamic and adaptable. Traditional rules-based policy engines aren’t going to cut it, unfortunately.
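Here is a rough sketch of where such a check could sit: a gate that runs before every step and either allows it, denies it, or routes it to a human. All names (`ProposedAction`, `gate`, `toy_scorer`) and the threshold are assumptions for illustration. The `risk_scorer` is deliberately pluggable, because a static rule like the toy one below is exactly what won’t be enough on its own; in practice it would be something more dynamic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN = "needs_human"
    DENY = "deny"


@dataclass(frozen=True)
class ProposedAction:
    intent: str          # what the agent says it is trying to do
    tool: str            # the tool it wants to call
    target_system: str   # the system the call would touch


def gate(
    action: ProposedAction,
    granted_tools: set[str],
    connected_systems: set[str],
    risk_scorer: Callable[[ProposedAction], float],
    approval_threshold: float = 0.7,
) -> Decision:
    # Permissions and connectivity are hard limits, whatever the stated intent.
    if action.tool not in granted_tools or action.target_system not in connected_systems:
        return Decision.DENY
    # High-risk actions go to a human instead of being executed directly.
    if risk_scorer(action) >= approval_threshold:
        return Decision.NEEDS_HUMAN
    return Decision.ALLOW


# Toy scorer for the example; a real one would weigh intent and context.
def toy_scorer(action: ProposedAction) -> float:
    return 0.9 if "firewall" in action.tool else 0.1


tools = {"read_logs", "change_firewall_rule"}
systems = {"staging"}

read = gate(ProposedAction("investigate alert", "read_logs", "staging"), tools, systems, toy_scorer)
open_port = gate(ProposedAction("fix connectivity", "change_firewall_rule", "staging"), tools, systems, toy_scorer)
prod = gate(ProposedAction("investigate alert", "read_logs", "production"), tools, systems, toy_scorer)
```

Here `read` is allowed, `open_port` is sent for human approval, and `prod` is denied because the agent is not connected to that system.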

Error handling - Agents can operate autonomously, so giving them the agency to take errors and resolve them dynamically, instead of failing the entire workflow, is the way to build effective autonomous agentic systems. Let’s consider this from a DAST (Dynamic Application Security Testing) perspective. Imagine you are building a DAST agent. By nature, a DAST agent needs to send a bunch of payloads to its target, observe the responses, and deduce whether something is a vulnerability or not. This is how traditional DAST scanners have worked: no dynamic decisions are made based on the application’s behavior. In the AI era, imo, there is a lot of room for improvement in such scenarios. For example, depending on the errors received in the response, the DAST agent can adapt dynamically and continue probing the target more effectively instead of simply spraying and praying. This would also address the DDoS-type side effects of such scanners, because there won’t be a need to throw a bunch of irrelevant payloads at a target and bring it down. Making your agents smart, adaptable, and stealthy will really test the efficacy of security controls. Having said that, one important point worth mentioning is that an agent should self-correct/adapt inside a sandboxed environment. You don’t want to give your DAST agent permission to access the file system, only to realize that it rm -rf’ed itself while trying to fix something.

Evaluating behavior or testing probabilistic systems - In agentic systems, unit tests just aren’t enough. Reliability, Quality and Tracing are key things to evaluate agentic behavior against. Let me explain this by using my own experience of building a vulnerability triage agent - I was flummoxed by its outcome because it was different every time I ran it. I wasn’t sure how to improve it because it wasn’t like the variance in the outcome was acceptable. It was basically true positive on one run and false positive on the next. And, without the AI/ML background, I had no idea how to build evals to actually make this triage agent work reliably. I started thinking from first principles. I started using the outcome from each run and fed it back to the AI to help me understand how I could improve the prompt so that the outcome was consistent and aligned with what I’d expect it to be. The AI would suggest some changes like adding logs at different points where the agent was making decisions. I’d look at the suggestions, make minor improvements and implement them. I’d then re-run the workflow and see if the outcome changed - whether it got better or worse. I was really prompt engineering at this point. Soon enough, I started seeing consistent outcomes from the agent. I could trust it, the quality of the reasoning was solid and I had traces of every action/decision the agent was making. I didn’t realize that I was unintentionally building some sort of an eval system manually where I had an input (vulnerability data), the expected output (human triage of the vulnerability whether a TP or a FP) and a prompt (context) that I could use AI to help improve to get to the desired outcome. Simple approaches like this are often lost in the hype cycle, but when you actually stick to first principles, it all makes sense.
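The manual loop described above maps onto a very small eval harness. The sketch below is an assumption about what such a harness could look like, not the one actually used: `evaluate` and `stub_triage` are hypothetical, and the stub stands in for a real call to the agent. It runs each labeled case several times and reports accuracy (does the majority verdict match the human triage?) and consistency (does every run give the same verdict?).

```python
from collections import Counter
from typing import Callable


def evaluate(
    triage: Callable[[str], str],
    cases: list[tuple[str, str]],
    runs: int = 5,
) -> dict[str, float]:
    """cases are (finding, expected_label) pairs, with labels 'TP' or 'FP'."""
    correct = 0
    stable = 0
    for finding, expected in cases:
        labels = [triage(finding) for _ in range(runs)]
        majority, count = Counter(labels).most_common(1)[0]
        correct += majority == expected  # majority verdict matches human triage
        stable += count == runs          # every run gave the same verdict
    total = len(cases)
    return {"accuracy": correct / total, "consistency": stable / total}


# Stand-in for the real agent call; replace with the actual triage agent.
def stub_triage(finding: str) -> str:
    return "TP" if "sqli" in finding else "FP"


cases = [
    ("sqli in /login handler", "TP"),
    ("missing cache header", "FP"),
    ("sqli pattern in test fixture", "FP"),  # the stub gets this one wrong
]
report = evaluate(stub_triage, cases)
```

Re-running `evaluate` after each prompt change shows whether the change made things better or worse, which is the “TP on one run, FP on the next” problem made measurable.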

Implicit vs Explicit Context - As humans, we have a lot of tribal knowledge and assumptions/perceptions of the world, i.e. implicit context. In software engineering, this translates to things like variable / function / tool naming, etc. But, more often than not, we don’t do a good enough job with this nomenclature and leave it ambiguous. Agents work differently. The more accurate the context we provide them, the less ambiguity we leave up to them to decipher, and the better the outcomes we are going to see. Another way to think about this is that traditional APIs are not adaptable, in the sense that they expect a pre-defined input and have specified output formats. That’s too restrictive. Agents, on the other hand, have the capability to adapt at runtime by reading tool definitions and adjusting the inputs accordingly. MCP tool definitions are a great example here. A tool with a wrong docstring or an incomplete name could lead to wrong invocations, resulting in unintended consequences. Removing the ambiguity and making it dead simple for agents is a recipe for building reliable and secure agentic systems.
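A cheap way to catch ambiguous tool definitions before an agent ever sees them is to lint them. The sketch below uses only Python’s standard `inspect` module on plain functions; it does not use any MCP SDK, and `tool_definition_issues` and both example tools are hypothetical. The five-word minimum is an arbitrary threshold picked for the example.

```python
import inspect
from typing import Callable


def tool_definition_issues(func: Callable) -> list[str]:
    """Flag the kinds of ambiguity an agent would otherwise have to guess at."""
    issues = []
    doc = inspect.getdoc(func)
    if not doc or len(doc.split()) < 5:
        issues.append("docstring missing or too short to describe the tool")
    signature = inspect.signature(func)
    for name, param in signature.parameters.items():
        if param.annotation is inspect.Parameter.empty:
            issues.append(f"parameter '{name}' has no type annotation")
    if signature.return_annotation is inspect.Signature.empty:
        issues.append("return type is not annotated")
    return issues


# Ambiguous: what does it get, and what kind of id does it expect?
def get(id):
    return {}


# Explicit: the name, types, and docstring tell the agent what to pass and expect.
def get_open_findings(repo_name: str, min_severity: str = "high") -> list[dict]:
    """Return open security findings for one repository, filtered by minimum severity."""
    return []


vague_issues = tool_definition_issues(get)
clear_issues = tool_definition_issues(get_open_findings)
```

The lint cannot tell whether a docstring is wrong, only whether one is there, so it complements human review of tool definitions and does not replace it.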

I feel that the quote below from the blog sums it up pretty well, where “it” refers to the probabilistic nature of agents.

“You must manage it through evals and self-correction.”

SecureVibes Update

For those who might not know, I open sourced a project called “SecureVibes” that is meant to help vibecoders find security vulnerabilities in their codebase using a slightly different approach compared to traditional tools. You can read more about it here. The project is on my GitHub here. Below are some updates on it:

Mahmud Muhammad gave a presentation at DevFest Ilorin and demoed SecureVibes. Here is the link to his deck.

I got an opportunity to present SecureVibes at a local AI Tinkerers Meetup in San Diego. The community loved it: it was voted the community favorite and was also featured in their newsletter here.

Shoutout to Yogi Kortisa and Kolla Harish for contributing to its code. We are going to keep improving it and learning from it as we continue to operate in this greenfield area. Watch this space, as we will share new learnings here. If you’d like to contribute, have questions, or are simply interested in following along on our journey, please feel free to join the Discord server below.